This page is moving to a new website.

Tag Archives: Data management

Recommended: When life gives you coloured cells, make categories

This page is moving to a new website.

Recommended: 9 Reasons Excel Users Should Consider Learning Programming

This page is moving to a new website.

Microsoft Excel is very popular, but it has many serious limitations. This article explains what you lose out on if you rely just on Excel and what additional capabilities that R and Python offer that allow you to do better work and do it more efficiently. Continue reading

Recommended: Use of Electronic Health Record Data in Clinical Investigations

This page is moving to a new website.

This press releases announces a “Guidance for Industry” document that the U.S. Food and Drug Administration provides from time to time on technical issues. This document discusses the use of the Electronic Health Record as an additional source of information for prospective clinical trials. Continue reading

Recommended: A crummy drop-down menu appeared to kill dozens of mothers in Texas.

This page is moving to a new website.

This article talks about how bad the maternal mortality rates are in the United States and how bad our effort to try to quantify the rate is. Continue reading

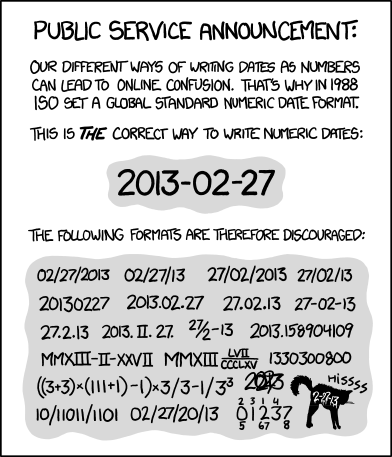

Recommended: ISO 8601

This page is moving to a new website.

This xkcd cartoon by Scott Munro is open source, so I can hotlink the image directly. But if you go to the source, https://xkcd.com/1179/, be sure to hover over the image for a second punch line.

Recommended: EuSpRIG horror stories.

This page is moving to a new website.

There has been a lot written about data management problems with using spreadsheets, and there is a group the European Spreadsheet Risks Interest Group that has documented the problem carefully and thoroughly. This page on their website lists the big, big, big problems that have occurred because of spreadsheet mistakes. Any program is capable of producing mistakes, of course, but spreadsheets are particularly prone to errors for a variety of reasons that this group documents. Continue reading

Recommended: The Reinhart-Rogoff error – or how not to Excel at economics

This page is moving to a new website.

There has been a lot written about how lousy Microsoft Excel (and other spreadsheet products) are at data management, but the warning sinks in so much more effectively when you can cite an example where the use of Excel leads to an embarrassing retraction. Perhaps the best example is the paper by Carmen Reinhart and Peter Rogoff where a major conclusion was invalidated when a formula inside their Excel spreadsheet accidentally included only 15 of the relevant 20 countries. Here’s a nice description of that event and some suggestions on how to avoid this in the future. Continue reading

Recommended: Data dictionaries

This page is moving to a new website.

This is a nice explanation of what goes into a data dictionary, written from the perspective of research data management. Continue reading

Recommended: OpenRefine: A free, open source, powerful tool for working with messy data

I have not had a chance to use this, but it comes highly recommended. OpenRefine is a program that uses a graphical user interface to clean up messy data, but it saves all the clean up steps to insure that your work is well documented and reproducible. I listed Martin Magdinier as the “author” in the citation below because he has posted most of the blog entries about OpenRefine, but there are many contributors to this package and website. Continue reading